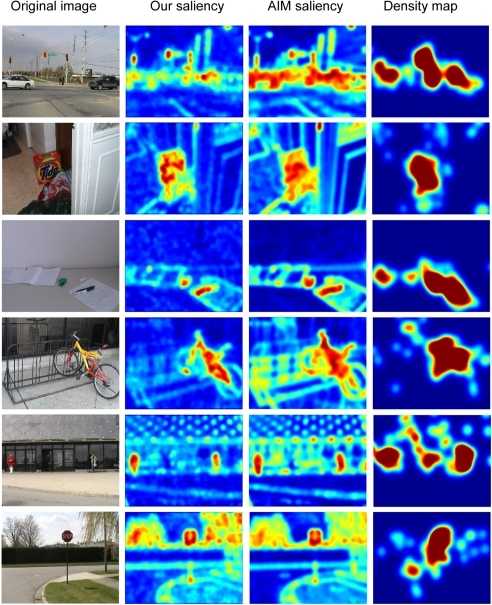

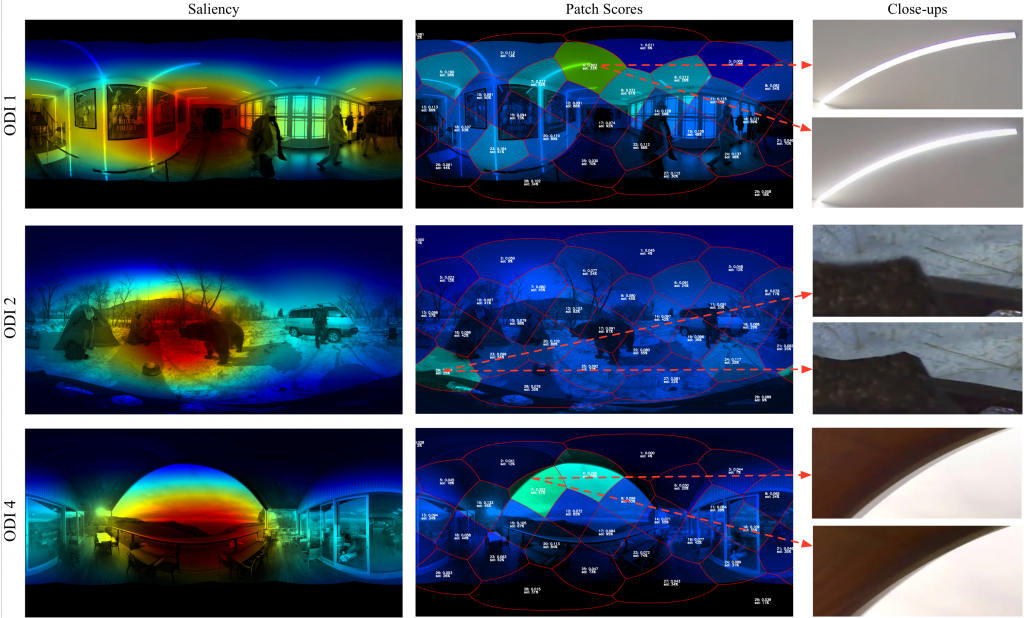

When it comes to understanding predictions generated by machine learning on graphs, one of the biggest questions we're left with is: why? Why was a certain prediction generated?

There is high demand for interpretability on graph neural networks, and this is especially true when we're talking about real-world problems. If we can't understand how or why a prediction was made, then it's difficult to trust it, let alone act on it.

StellarGraph's recent article about machine learning on graphs introduced graph neural networks and the tasks they can solve. This time, we're discussing interpretability: in other words, techniques to interpret the predictions returned by a machine learning model. Using node classification with graph convolutional networks (GCNs) as a case study, we'll look at how to measure the importance of specific nodes and edges of a graph in the model's predictions. This will involve exploring the use of saliency maps to check whether the model's prediction changes if we remove or add a certain edge, or change node features.

Despite extensive interest in interpreting neural network models, there is no established definition of interpretability. Some researchers define interpretability as the degree to which a human can understand the cause of a decision; others say it is the degree to which humans can consistently predict the model's decision. Ongoing research on this topic generally relates interpretability to many other objectives, such as trust, transparency, causality, informativeness, and fairness.

My personal view is that interpretability of graph machine learning models is a way to tell which node features or graph edges play a more important role in the model's prediction. It essentially asks a counterfactual question: how would the prediction change if these node features were different, or if these edges didn't exist?

When GCNs are applied to node classification tasks, the model predicts the label of a given target node based on both the features of nodes (including the target node itself) and the structure of the graph. The interpretability problem we want to tackle is: which nodes, edges and features led to this prediction? And similarly, what would happen if certain edges did not exist, or if the features of some nodes were different?

Saliency mapping is a technique with origins in the computer vision literature, used to change or simplify an image into a representation that is meaningful to humans and therefore easier to analyse. Applied to GCNs, such interpretations can potentially provide insights into the underlying reasoning mechanism of the model.
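To make the saliency-map and counterfactual ideas above a little more concrete, here is a minimal sketch in NumPy. It assumes a single-layer, linear GCN (logits = Â X W, with the symmetric normalisation Â = D^-1/2 (A + I) D^-1/2 commonly used in GCNs) and uses random weights as a stand-in for a trained model. It shows two things: a gradient-based saliency map over node features for one target node, and an edge-importance score obtained by deleting each edge in turn and measuring the drop in the predicted class probability. All function names here are hypothetical illustrations, not StellarGraph's API.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetric GCN normalisation: D^-1/2 (A + I) D^-1/2."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_logits(adj, features, weights):
    """Assumed toy model: a single linear GCN layer, logits = A_norm @ X @ W."""
    return normalize_adjacency(adj) @ features @ weights


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def feature_saliency(adj, weights, target, cls):
    """Gradient of logits[target, cls] with respect to every feature of every node.

    For logits = A_norm X W, d logits[target, cls] / d X[j, k] = A_norm[target, j] * W[k, cls],
    so for this linear model the saliency map is an outer product.
    """
    a_norm = normalize_adjacency(adj)
    return np.outer(a_norm[target], weights[:, cls])


def edge_importance(adj, features, weights, target, cls):
    """Counterfactual edge scores: drop in P(cls | target) when each edge is removed.

    A positive score means the edge supports the current prediction.
    """
    base = softmax(gcn_logits(adj, features, weights)[target])[cls]
    scores = {}
    n = adj.shape[0]
    for i in range(n):
        for j in range(i + 1, n):  # undirected graph: visit each edge once
            if adj[i, j] == 0:
                continue
            perturbed = adj.copy()
            perturbed[i, j] = perturbed[j, i] = 0.0
            prob = softmax(gcn_logits(perturbed, features, weights)[target])[cls]
            scores[(i, j)] = base - prob
    return scores


# Usage on a 4-node toy graph with edges 0-1, 0-2 and 1-3.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 1, 0],
                [1, 0, 0, 1],
                [1, 0, 0, 0],
                [0, 1, 0, 0]], dtype=float)
features = rng.normal(size=(4, 3))
weights = rng.normal(size=(3, 2))  # stand-in for trained weights (assumption)
target = 0
cls = int(np.argmax(gcn_logits(adj, features, weights)[target]))
print(np.round(feature_saliency(adj, weights, target, cls), 3))
print({edge: round(float(s), 3) for edge, s in edge_importance(adj, features, weights, target, cls).items()})
```

Because this toy model is linear, the gradient is exact, and node 3 (two hops away from node 0) gets zero feature saliency, although removing its edge to node 1 can still shift the prediction through the degree normalisation. With a deeper, non-linear GCN the gradient would instead be computed by automatic differentiation, and it only approximates the effect of an actual perturbation, which is why gradient saliency and explicit edge or feature removal can give different answers.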
